How can you pick the best AI tool for your research needs, and what do you need to be aware of?

The Use of AI in Research Guidelines can guide you through the considerations involved in using AI while maintaining rigorous, robust, high-quality research.

For the best results with GenAI tools, select one that fits your research needs. Researchers should weigh a tool's capabilities against any associated research and cybersecurity risks, as well as ethical considerations.

- How can I check for errors and hallucinations in the results from AI?

- Can I confirm that my results remain reproducible when using AI methods?

- Have I checked for bias in AI-generated results? Do I know what biases may be present in the data used to train the AI model?

- Is there a way to check that the results the AI model gives me don't plagiarise other people's work?

- Have I appropriately referenced the use of AI in my work?

- Have I checked for cybersecurity risks associated with using a specific AI tool?

Thoughts on AI use by UTS staff

Below is a range of articles and documents that UTS researchers and professional staff found useful or interesting when considering AI use, shared with the wider community to spark discussion and inform future considerations.

Quality of AI-Generated Research

- Nature news feature: Is it OK for AI to write science papers?

- Can AI create high-quality, publishable research articles? (opinion piece by Research Information)

- Edith Cowan University - AI Data Management (creating a catalogue of your AI prompts for academic rigour)

- REFORMS: Consensus-based Recommendations for Machine-learning-based Science (discussion around the validity, reproducibility, and generalisability of machine learning methods)

- Sakana.ai article about the first peer-reviewed scientific publication written by an AI

Risks of AI misuse

- MIT’s AI Risk Database – a living database of over 1,600 AI risks categorised by their cause and risk domain

- Charles Sturt University - Publication & AI (understanding the legal and ethical considerations when publishing with AI content)

- Suspected undeclared AI usage in academic literature

- The surge of AI content in research papers and its potential implications

- Times Higher Education article on research papers reviewed by AI

- Nature news feature on AI transforming peer review

Social and Ethical Impact

- A Personalized Ecology of AI (an article about the environmental impact of AI)

- Mistral AI's contribution to a global environmental standard for AI

- Data Science Institute – Ethical Artificial Intelligence (exploring trust and user behaviour in the design and use of AI)

- Modern Slavery and AI

- Impacts of AI on the environment (from the final report of the Australian Parliament’s committee on adopting artificial intelligence)

Governance

- NSW Government – Mandatory Ethical Principles for the use of AI

- AI Governance Snapshot Series written by the Human Technology Institute (HTI) at UTS

Coming Soon: Quick Check Guide for Commonly Used GenAI Tools

This guide is currently being reviewed and finalised by eResearch and the ODVCR. The list of tools was compiled from recommendations by UTS academic and professional staff through the Use of AI in Research Working Groups, as well as recommendations received by word of mouth and email.

Once the guide is available, we welcome feedback and updates from the UTS community to make sure it remains up to date and fit for purpose.

Other tools and resources to help you:

Did you know you can check the AI Incident Database to search and submit potential issues and incidents associated with AI tools?

You can also learn more about how Microsoft Defender 365 calculates the risk score for cloud-based AI tools. Note that any tool with a score of 5 or below is automatically flagged by our ITU team.

Compliance: Does your end user allow the use of AI?

Before using AI for your research, check that you are complying with the rules of the organisation you plan to submit your work to, such as a publisher or funder.

Publishing

Some publishers now ask whether you've used AI in your work and how you used it. Requirements vary across funders and publishers, so if you are unsure, seek assistance from the Research Office or the Library.

Charles Sturt University has created a guide to the use of GenAI in publications, covering the use of AI in publications and grant applications as well as copyright considerations. Edith Cowan University has created a guide on how to catalogue your AI prompts for good record management.

Did you know that the ARC advises caution when using GenAI to write funding applications, and prohibits assessors from using GenAI as part of their assessment activities?

Peer Review

Publishers have different rules on AI use in peer review. AI can assist with grammar, clarity, and compliance checks, but researchers must fully engage with the work to maintain trust and academic rigour. AI can only draw on existing knowledge, not new research, which is why academic expertise and insight remain essential.

Cybersecurity: Data privacy and IP protection checks

1. What data is collected by the company?

Does the company keep a record of your prompts or your uploaded files? What personal information does it collect about you and your devices? How long is this data stored before it is destroyed? This information can typically be found in the Privacy Policy.

Did you know that some programs will keep screenshots of your interactions? Did you know that some companies will save your prompts if you click “give feedback”, even if they do not save prompts by default?

Here’s a real example from an AI tool: “...the transfer of Personal Information through the internet will carry its own inherent risks and we do not guarantee the security of your data transmitted through the internet. You make any such transfer at your own risk.”

2. Where is the data stored? Is the data used to train the AI model?

Some data is stored overseas, where its handling may not comply with the Australian Privacy Act, and some AI tools use your data to train their models. This information can typically be found in the Privacy Policy.

3. Who owns the IP?

Does the company claim IP ownership of the prompts that you enter into the AI tool? Does it own the IP in the results it gives you? If you wish to use the results from an AI tool but you do not own the IP, you will need to seek permission before using them. This information can typically be found in the Terms of Service.

Here’s a real example from an AI tool: “You must not modify the paper or digital copies of any materials you have printed off or downloaded in any way, and you must not use any illustrations, photographs, video or audio sequences or any graphics separately from any accompanying text. You must not use any part of the content on our site for commercial purposes without obtaining a license to do so from us or our licensors.”

Another example from an AI tool: “…the Company owns all worldwide right, title and interest, including all intellectual property and other proprietary rights, in and to the Service (including without limitation the Company’s proprietary classifier technology) and all usage and other data generated or collected by the Company in connection with the provision of the Service to users (collectively, the “Company Materials”).”

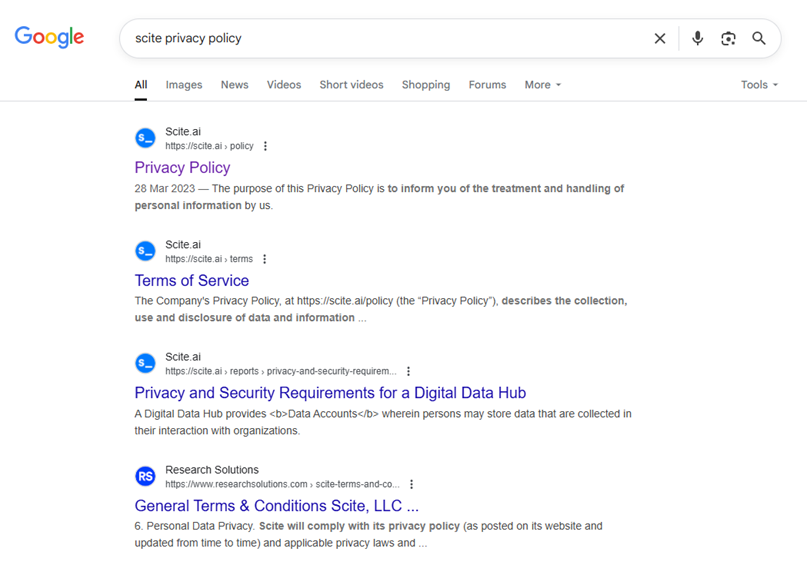

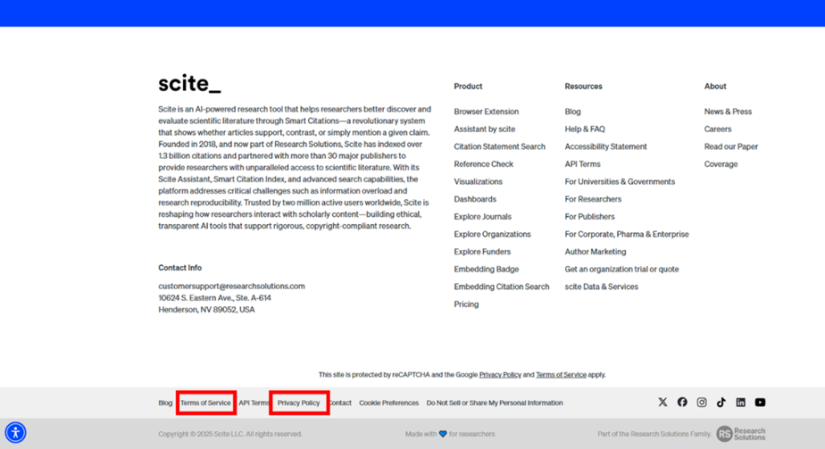

4. How do I find the Privacy Policy and Terms of Service for my AI tool?

The information you need is usually in the AI tool's Privacy Policy and Terms of Service, so check both. You can find them with a web search, or look for links in the site map at the bottom of the tool's website.

5. Is the tool high-risk?

ITU has created a list of AI tools, accessed on UTS devices, that are considered high-risk and should not be used by UTS staff. Alerts and notifications are being rolled out to warn UTS staff and students when they attempt to access high-risk cloud applications on this list, and ITU provides updates via its Cybersecurity SharePoint site.

Please note that this list is not exhaustive: it covers only tools that have been used on UTS-registered systems. An AI tool that does not appear on the list may still be high-risk and may require further investigation by ITU.

Copyright and Licensing: Do you have the rights to upload the data (including in prompts)?

Before uploading anything into an AI tool, ensure you have permission to do so. The fact that the Library allows UTS staff to download and read publications does not mean you can upload those articles into AI tools for summarisation or literature review. Agreements differ between publishers, so check with a Librarian if you are unsure.

For data from third parties, check the data licence or data sharing agreement (as per the Research Data Management Procedure) before uploading anything into an AI tool.